Object detection

BAdaCost: Multi-class Boosting with Costs

We introduce a multi-class cost-sensitive Boosting algorithm based on a generalization of the Boosting margin, termed the multi-class cost-sensitive margin. We prove that the new algorithm, BAdaCost, is a cost-sensitive generalization of SAMME as well as a multi-class generalization of Cost-sensitive AdaBoost. First, we evaluate its performance on standard multi-class datasets and compare our results with those of other multi-class cost-sensitive algorithms proposed in the literature. Having shown that BAdaCost performs best among the multi-class cost-sensitive Boosting algorithms compared, we address different applications of the cost-sensitive approach. We further validate our algorithm on two relevant computer vision problems: multi-view face detection and multi-view car detection. We show experimentally that BAdaCost makes multi-view object detection possible and performs better than the usual one-detector-per-view approach. Moreover, the tree-based classifiers trained with our approach are more efficient than the one-detector-per-class solution, because far fewer decision tree nodes have to be learned and executed.
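BAdaCost is described above as a cost-sensitive generalization of SAMME. For orientation, the following is a minimal sketch of SAMME itself, which is the cost-insensitive multi-class AdaBoost. It is not BAdaCost, and it does not model the multi-class cost-sensitive margin or any cost matrix. The helper names (`fit_stump`, `samme_fit`, `samme_predict`) are hypothetical. The weak learners are single-feature decision stumps, chosen only to keep the example self-contained. The characteristic SAMME step is the learner weight α = log((1 − err)/err) + log(K − 1). The extra log(K − 1) term only requires each weak learner to beat random guessing among K classes.

```python
import math
from collections import Counter


def fit_stump(X, y, w, classes):
    """Weighted multi-class decision stump (hypothetical helper):
    one feature, one threshold, weighted-majority class on each side."""
    best = None
    for f in range(len(X[0])):
        for t in sorted(set(row[f] for row in X)):
            left, right = Counter(), Counter()
            for row, label, wi in zip(X, y, w):
                (left if row[f] <= t else right)[label] += wi
            # Predict the class carrying the most weight on each side.
            lc = max(classes, key=lambda c: left[c])
            rc = max(classes, key=lambda c: right[c])
            err = sum(wi for row, label, wi in zip(X, y, w)
                      if (lc if row[f] <= t else rc) != label)
            if best is None or err < best[0]:
                best = (err, f, t, lc, rc)
    _, f, t, lc, rc = best
    return lambda row: lc if row[f] <= t else rc


def samme_fit(X, y, n_rounds=10):
    """SAMME training loop (0/1 loss, no cost matrix)."""
    classes = sorted(set(y))
    K = len(classes)  # assumes K >= 2
    w = [1.0 / len(X)] * len(X)
    ensemble = []
    for _ in range(n_rounds):
        h = fit_stump(X, y, w, classes)
        miss = [h(row) != label for row, label in zip(X, y)]
        err = sum(wi for wi, m in zip(w, miss) if m) / sum(w)
        if err >= 1.0 - 1.0 / K:
            break  # no better than random guessing among K classes
        err = max(err, 1e-10)  # avoid log(0) for a perfect weak learner
        alpha = math.log((1.0 - err) / err) + math.log(K - 1)
        ensemble.append((alpha, h))
        if err <= 1e-10:
            break  # perfect weak learner: the weights would not change
        # Up-weight misclassified samples, then renormalise.
        w = [wi * math.exp(alpha) if m else wi for wi, m in zip(w, miss)]
        total = sum(w)
        w = [wi / total for wi in w]
    return classes, ensemble


def samme_predict(model, row):
    """Return the class with the largest alpha-weighted vote."""
    classes, ensemble = model
    votes = {c: 0.0 for c in classes}
    for alpha, h in ensemble:
        votes[h(row)] += alpha
    return max(classes, key=lambda c: votes[c])
```

For example, on the toy data `X = [[0], [1], [2], [3], [4], [5]]` with labels `y = [0, 0, 1, 1, 2, 2]`, no single stump separates all three classes. Three rounds of `samme_fit(X, y, n_rounds=3)` are enough for `samme_predict` to recover every training label.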

Software

We have modified Piotr Dollár's Matlab toolbox to perform multi-class detection with trees. The trees are cost-sensitive multi-class classifiers that need a cost matrix to work. The BAdaCost cost-sensitive multi-class classifier is then used to perform object detection.
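The following sketch illustrates what a cost matrix encodes. It uses the standard minimum-expected-cost decision rule: given class-probability estimates p(i | x) and a matrix C[i][j] holding the cost of predicting class j when the true class is i, choose the j that minimizes Σᵢ p(i | x) C[i][j]. This is a generic cost-sensitive rule. It is not necessarily the exact rule used inside BAdaCost or the modified toolbox. The function name, the class layout (background plus two object views), the probabilities, and the cost values are all hypothetical.

```python
def min_expected_cost_label(probs, cost):
    """Return the label j minimising sum_i probs[i] * cost[i][j].

    probs[i]   : estimated probability that the true class is i
    cost[i][j] : cost of predicting j when the true class is i
                 (zero diagonal: correct decisions cost nothing)
    """
    n = len(probs)
    expected = [sum(probs[i] * cost[i][j] for i in range(n)) for j in range(n)]
    return min(range(n), key=lambda j: expected[j])


# Hypothetical classes: 0 = background, 1 = object view A, 2 = object view B.
# Hypothetical costs: missing an object (predicting background) costs 5,
# while a false alarm or a confusion between views costs 1.
asymmetric_cost = [
    [0, 1, 1],  # true class: background
    [5, 0, 1],  # true class: view A
    [5, 1, 0],  # true class: view B
]
zero_one_cost = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
probs = [0.6, 0.25, 0.15]  # hypothetical posterior estimates
```

With the 0/1 costs, the rule reduces to picking the most probable class, which is background (label 0). With the asymmetric costs, the same probabilities yield view A (label 1), because missing an object is now five times as expensive as a false alarm.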

Download the code from GitHub.

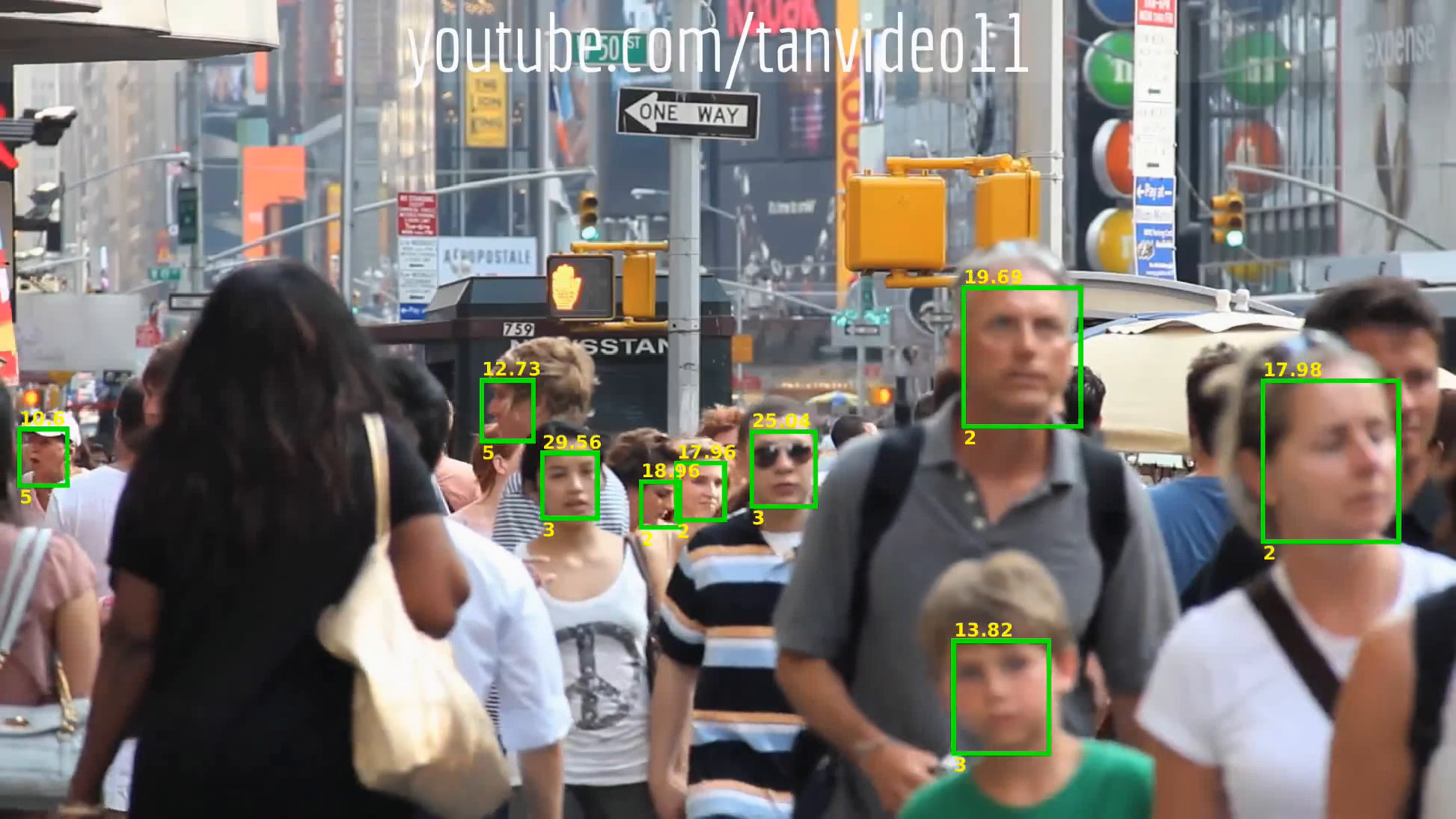

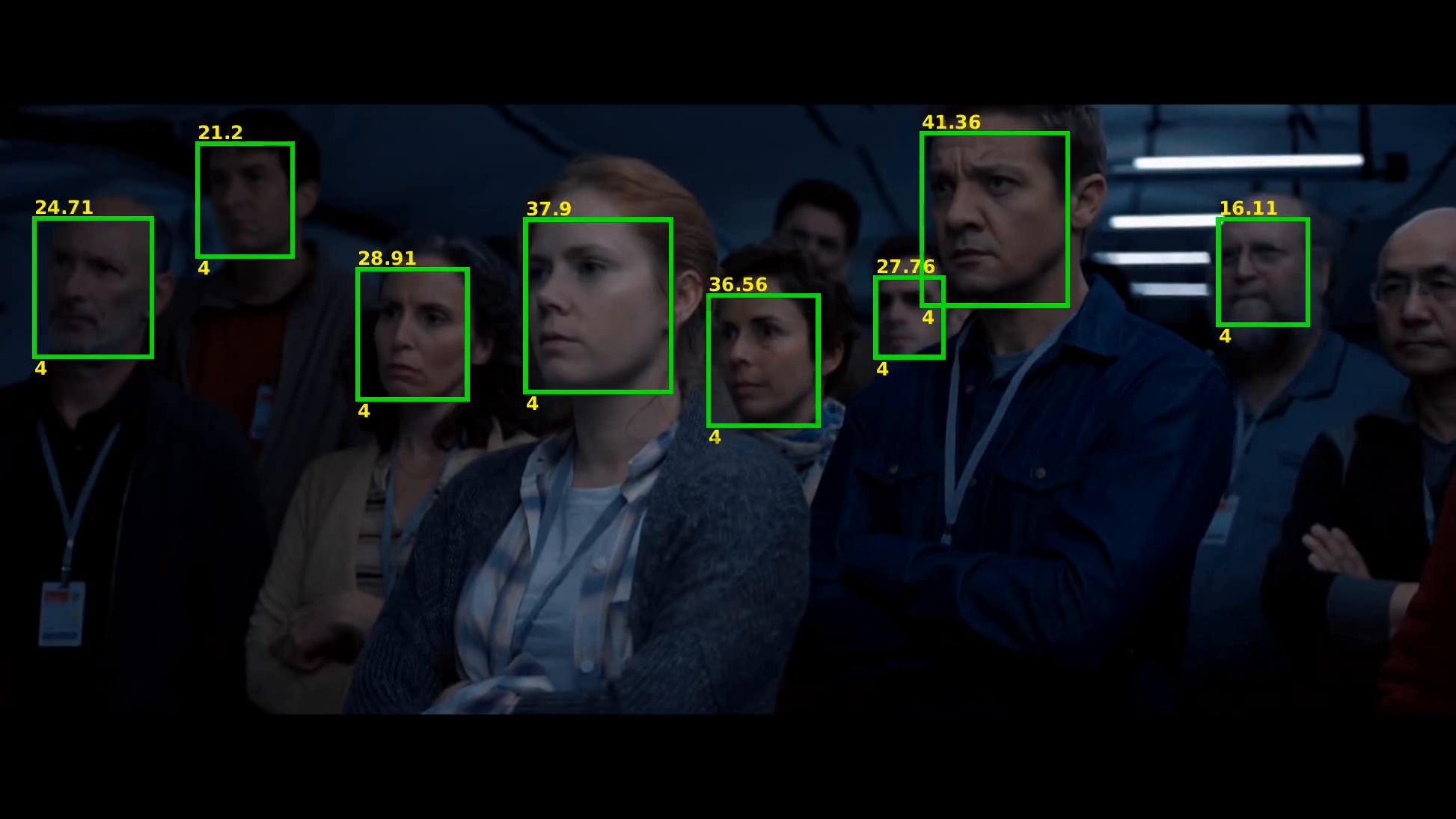

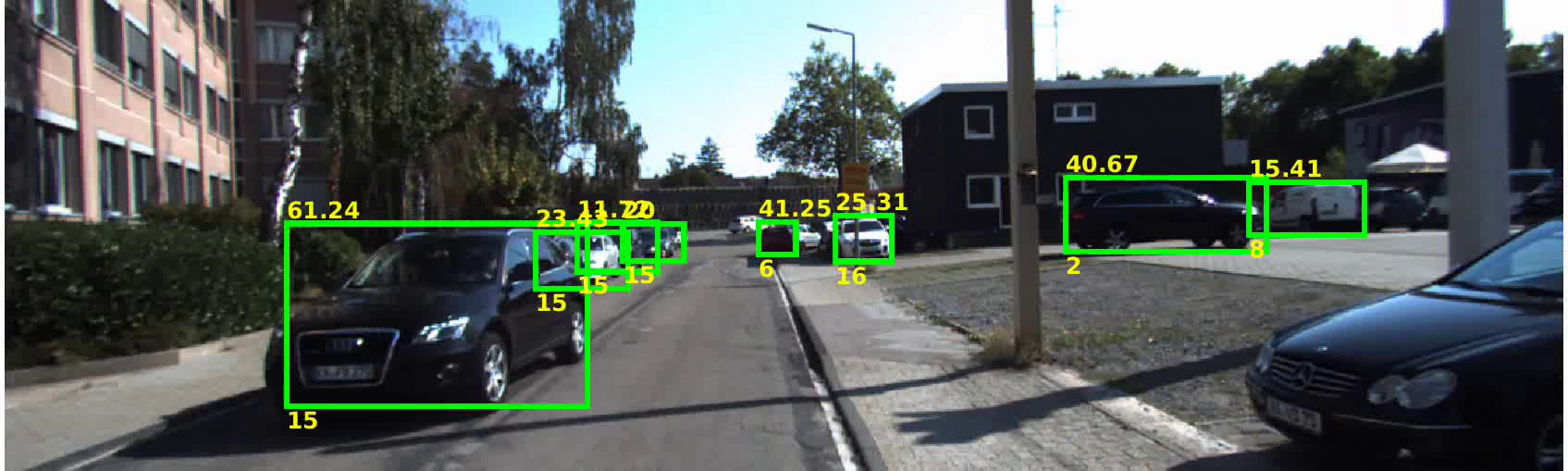

Results

All videos here were processed with our Matlab implementation of BAdaCost. The detections shown (faces and cars) are all the bounding boxes with a score greater than or equal to 10, the same threshold used in the paper.
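The score threshold above amounts to a simple filter on the detector output. The sketch below assumes that each detection is an `(x, y, width, height, score)` tuple. That layout, the function name `keep_confident`, and the constant name are hypothetical and do not describe the toolbox's actual data structures. Only the threshold value of 10 comes from this page.

```python
SCORE_THRESHOLD = 10.0  # value stated on this page: keep score >= 10


def keep_confident(detections, threshold=SCORE_THRESHOLD):
    """Keep detections whose score is greater than or equal to threshold.

    Each detection is assumed (hypothetically) to be an
    (x, y, width, height, score) tuple.
    """
    return [d for d in detections if d[4] >= threshold]
```

Note that the comparison is inclusive, so a box scoring exactly 10 is kept.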